SpatialCodec: Neural Spatial Speech Coding

- DOI: 10.60864/s492-cs81
- Citation Author(s):
- Submitted by: Zhongweiyang Xu
- Last updated: 6 April 2024 - 5:23pm
- Document Type: Poster
- Document Year: 2024
- Event:
- Presenters: Muqiao Yang
- Paper Code: AASP-P5.7
- Categories:

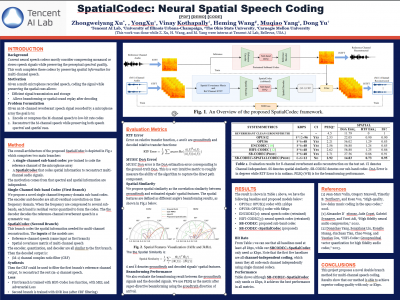

In this work, we address the challenge of encoding speech captured by a microphone array using deep learning techniques, with the aim of preserving and accurately reconstructing the crucial spatial cues embedded in multi-channel recordings. We propose a neural spatial audio coding framework that achieves a high compression ratio by combining a single-channel neural sub-band codec with SpatialCodec. Our approach has two phases: (i) a neural sub-band codec encodes the reference channel at low bit rates, and (ii) SpatialCodec captures relative spatial information for accurate multi-channel reconstruction at the decoder end. We also propose novel evaluation metrics to assess spatial cue preservation: (i) spatial similarity, which calculates cosine similarity on a spatially intuitive beamspace, and (ii) beamformed audio quality. Our system shows superior spatial performance compared with high-bitrate baselines and a black-box neural architecture. Demos are available at https://xzwy.github.io/SpatialCodecDemo. Code and models are available at https://github.com/XZWY/SpatialCodec.
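To make the idea of "cosine similarity on a beamspace" more concrete, the following is a minimal sketch for a single time-frequency bin. It is not the authors' implementation: the far-field plane-wave model, the delay-and-sum beamformer, the 4-microphone circular array, and all function names (`steering_vector`, `beam_pattern`, `spatial_similarity`) are assumptions made purely for illustration. A real evaluation would presumably aggregate over many time-frequency bins of the original and decoded signals.

```python
import cmath
import math


def steering_vector(mic_positions, angle_rad, freq_hz, speed_of_sound=343.0):
    """Far-field plane-wave steering vector (assumed model, planar array)."""
    ux, uy = math.cos(angle_rad), math.sin(angle_rad)
    vec = []
    for x, y in mic_positions:
        # Relative time advance of the wavefront at this microphone.
        delay = (x * ux + y * uy) / speed_of_sound
        vec.append(cmath.exp(2j * math.pi * freq_hz * delay))
    return vec


def beam_pattern(channels, mic_positions, angles, freq_hz):
    """Delay-and-sum beam power in each candidate direction for one bin."""
    pattern = []
    for angle in angles:
        w = steering_vector(mic_positions, angle, freq_hz)
        out = sum(wi.conjugate() * xi for wi, xi in zip(w, channels))
        pattern.append(abs(out / len(channels)) ** 2)
    return pattern


def cosine_similarity(p, q, eps=1e-12):
    """Cosine similarity between two real-valued vectors."""
    dot = sum(a * b for a, b in zip(p, q))
    norm = math.sqrt(sum(a * a for a in p)) * math.sqrt(sum(b * b for b in q))
    return dot / (norm + eps)


def spatial_similarity(ref_bin, est_bin, mic_positions, angles, freq_hz):
    """Compare beamspace patterns of reference and reconstructed channels."""
    ref_pattern = beam_pattern(ref_bin, mic_positions, angles, freq_hz)
    est_pattern = beam_pattern(est_bin, mic_positions, angles, freq_hz)
    return cosine_similarity(ref_pattern, est_pattern)


# Hypothetical setup: 4 microphones on a circle of radius 5 cm, one 1 kHz bin.
radius = 0.05
mics = [(radius * math.cos(math.radians(a)), radius * math.sin(math.radians(a)))
        for a in (0, 90, 180, 270)]
angles = [math.radians(a) for a in range(0, 360, 10)]
freq = 1000.0

# Reference bin: a source at 60 degrees. Two candidate "reconstructions":
ref = steering_vector(mics, math.radians(60), freq)
same = spatial_similarity(ref, ref, mics, angles, freq)
moved = spatial_similarity(
    ref, steering_vector(mics, math.radians(240), freq), mics, angles, freq)
```

In this toy setup, `same` is close to 1 because the spatial cues are identical, while `moved` is lower because the reconstructed source appears from the opposite direction. A waveform-level metric computed on the reference channel alone would not register that difference.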